Diff Transferer

Any-speaker adaptive Text-to-Speech with diffusion

In this course project, we designed a zero-shot, any-speaker adaptive TTS (Text-to-Speech) model that synthesizes speech from prompts by speakers it has not seen before. Given a text and a few seconds of audio from an unseen speaker, the model synthesizes speech in that speaker's style.

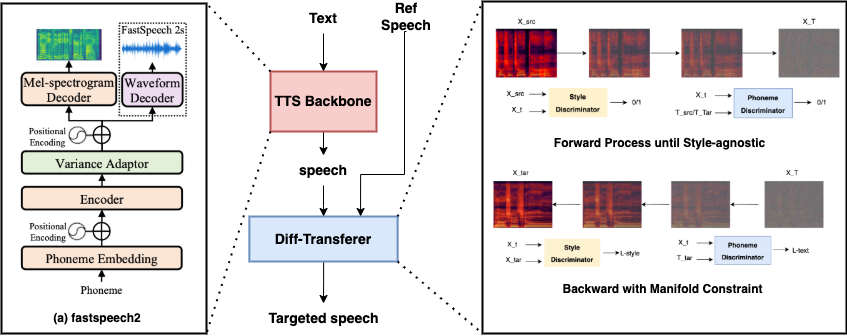

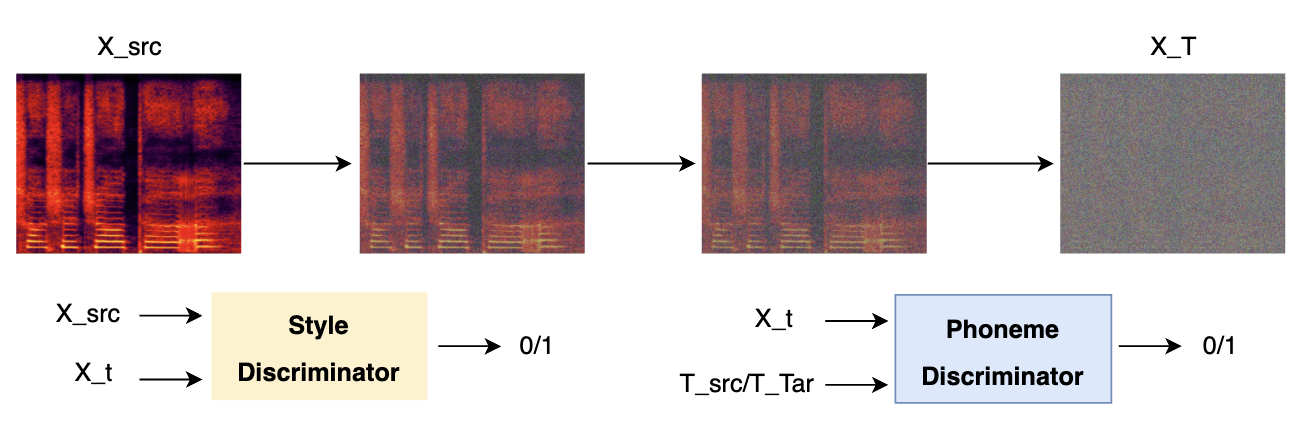

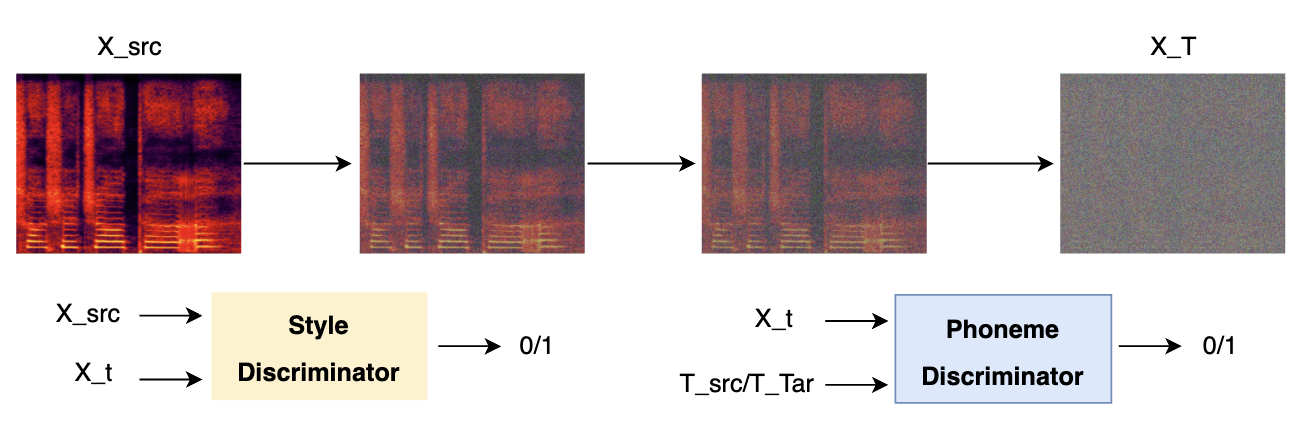

Specifically, we 1) design a style encoder and a phoneme encoder to decouple the content and the style of a speech signal; 2) train a conditional diffusion-based style transferer with manifold constraints, which transfers the speech generated by an existing TTS backbone to the target style; and 3) leverage a shallow diffusion mechanism to speed up training. With these designs, our model can adapt quickly to various unseen speaker domains.
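To make steps 2 and 3 more concrete, here is a minimal NumPy sketch of one common reading of a shallow diffusion mechanism: instead of starting from pure noise, the backbone output is noised only up to an intermediate step `k`, and the style-conditioned reverse process runs from `k` back to 0. This is an illustration under stated assumptions, not the project's implementation. The noise schedule, the value of `k`, the tensor shapes, and every function name (`make_schedule`, `q_sample`, `shallow_reverse`, `dummy_denoiser`) are hypothetical; the denoiser is a placeholder that stands in for the trained network, and the manifold constraints are not modeled. The sketch covers only the sampling side.

```python
import numpy as np


def make_schedule(num_steps=100, beta_start=1e-4, beta_end=0.06):
    """Linear beta schedule (assumed; the project's schedule is not specified)."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alphas = 1.0 - betas
    alpha_bars = np.cumprod(alphas)
    return betas, alphas, alpha_bars


def q_sample(x0, k, alpha_bars, rng):
    """Noise x0 directly to step k (1-indexed) using the closed-form q(x_k | x_0)."""
    a_bar = alpha_bars[k - 1]
    noise = rng.standard_normal(x0.shape)
    return np.sqrt(a_bar) * x0 + np.sqrt(1.0 - a_bar) * noise


def dummy_denoiser(x_t, t, style):
    """Placeholder for a trained, style-conditioned noise predictor."""
    return np.zeros_like(x_t)


def shallow_reverse(x_k, k, style, denoiser, schedule, rng):
    """Run standard DDPM ancestral sampling from step k down to step 1."""
    betas, alphas, alpha_bars = schedule
    x = x_k
    for t in range(k, 0, -1):
        eps = denoiser(x, t, style)
        beta, alpha, a_bar = betas[t - 1], alphas[t - 1], alpha_bars[t - 1]
        mean = (x - beta / np.sqrt(1.0 - a_bar) * eps) / np.sqrt(alpha)
        if t > 1:
            # Add noise on every step except the last one.
            x = mean + np.sqrt(beta) * rng.standard_normal(x.shape)
        else:
            x = mean
    return x


rng = np.random.default_rng(0)
schedule = make_schedule()

# Stand-ins for the TTS backbone output (a mel-spectrogram) and the style embedding.
backbone_mel = rng.standard_normal((80, 120))
style = rng.standard_normal(128)

k = 30  # shallow step, well below the 100 steps of the full chain
x_k = q_sample(backbone_mel, k, schedule[2], rng)
transferred = shallow_reverse(x_k, k, style, dummy_denoiser, schedule, rng)
```

Because the reverse loop covers only `k` of the full number of steps, the transferer refines an already plausible spectrogram rather than generating one from scratch.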

We also try to extend the zero-shot any-speaker adaptive TTS model to an any-singer adaptive SVS (Singing Voice Synthesis) model. Singing voice is more complicated: beyond the speech style, it also carries rhythm, chord, and singing-technique styles.

We used a text-to-speech backbone to generate speech from the given text, then used a conditional diffusion model to transfer that backbone-generated speech to the target style.